A key aspect of optimizing battery run-time on battery-powered mobile devices is measuring and analyzing their current drain. This gives greater insight into how the device uses its battery power, and then into how to make better use of it. I went into a bit of detail on this in a previous posting, “Using Current Drain Measurements to Optimize Battery Run-time of Mobile Devices”.

A second aspect of optimizing battery run-time is making certain you get optimum use out of the battery powering the device. This starts with understanding and validating the battery’s stated capacity and energy ratings. Simply assuming the battery meets or exceeds its stated ratings without validating them is bound to leave you with shorter run-time than expected. It is critical that you validate them under the manufacturer’s recommended conditions. This serves as a starting point for finding out what you can ultimately expect from the battery you intend to use in your device. More than likely, constraints imposed by the nature of your device, its operating conditions, and its requirements will further reduce the capacity you can expect from the battery in actual use.

A battery’s capacity rating is the total amount of charge the battery can deliver. It is the product of the current it can deliver over time, stated in ampere-hours (Ah) or milliampere-hours (mAh). Alternatively, the capacity rating is also stated in coulombs (C), where:

· 1 coulomb (C) = 1 ampere-second

· 1 ampere-hour (Ah) = 3,600 coulombs
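Because 1 Ah is 1 A flowing for 3,600 s, converting between capacity units is a single scaling step. The minimal sketch below shows this; the function names and the 2,000 mAh example cell are hypothetical and only illustrate the unit relationships listed above.

```python
# Unit conversions for a battery's charge (capacity) rating.
COULOMBS_PER_AMPERE_HOUR = 3600.0  # 1 Ah = 1 A x 3,600 s


def mah_to_coulombs(capacity_mah: float) -> float:
    """Convert a capacity in milliampere-hours to coulombs (ampere-seconds)."""
    return capacity_mah / 1000.0 * COULOMBS_PER_AMPERE_HOUR


def coulombs_to_ah(charge_c: float) -> float:
    """Convert a charge in coulombs back to ampere-hours."""
    return charge_c / COULOMBS_PER_AMPERE_HOUR


# Hypothetical 2,000 mAh cell
print(mah_to_coulombs(2000))  # 7200.0
```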

A battery’s energy rating is the total amount of energy the battery can deliver. It is the product of the power it can deliver over time, stated in watt-hours (Wh) or milliwatt-hours (mWh). It is also the product of the battery’s capacity (Ah) and its average voltage (V) over the discharge. Alternatively, the energy rating is also stated in joules (J), where:

· 1 joule (J) = 1 watt-second

· 1 watt-hour (Wh) = 3,600 joules
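As a quick worked example, energy can be estimated from capacity and average voltage and then converted to joules. This is a minimal sketch assuming a hypothetical 2.0 Ah cell with a 3.7 V average discharge voltage; using an average (or nominal) voltage is an approximation, and a measured energy figure comes from integrating voltage times current over the discharge.

```python
# Estimate a battery's energy rating from capacity and average voltage.
JOULES_PER_WATT_HOUR = 3600.0  # 1 Wh = 1 W x 3,600 s


def energy_wh(capacity_ah: float, average_voltage_v: float) -> float:
    """Energy (Wh) ~= capacity (Ah) x average discharge voltage (V)."""
    return capacity_ah * average_voltage_v


def wh_to_joules(energy: float) -> float:
    """Convert watt-hours to joules (watt-seconds)."""
    return energy * JOULES_PER_WATT_HOUR


# Hypothetical cell: 2.0 Ah at an assumed 3.7 V average voltage
e = energy_wh(2.0, 3.7)
print(f"{e:.2f} Wh = {wh_to_joules(e):.0f} J")
```

The format specifiers simply keep floating-point rounding noise out of the printed result.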

One more fundamental parameter relating to a battery’s capacity and energy ratings is the C rate, or charge (or discharge) rate. This is the ratio of the current flowing into the battery when charging (or drawn from it when discharging) to the battery’s capacity, where:

· C rate (C) = current (A) / capacity (Ah)

· C rate (C) = 1 / charge or discharge time (h)
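The two C rate relationships can be checked with a couple of lines of arithmetic. In the sketch below the function names and the example currents are hypothetical. The nominal time of 1 / C rate is an idealization that ignores the capacity loss at higher C rates, so actual discharge times at high rates will be shorter.

```python
def c_rate(current_a: float, capacity_ah: float) -> float:
    """C rate (units of 1/h) = current (A) / capacity (Ah)."""
    return current_a / capacity_ah


def nominal_hours(rate_per_h: float) -> float:
    """Nominal charge or discharge time (h) = 1 / C rate.

    Idealized: ignores the capacity drop at higher C rates.
    """
    return 1.0 / rate_per_h


# Hypothetical: 400 mA drawn from a 2.0 Ah cell
rate = c_rate(0.4, 2.0)
print(f"{rate:.1f}C -> about {nominal_hours(rate):.1f} h")

# Hypothetical power-tool style load: 20 A from the same 2.0 Ah cell
print(f"{c_rate(20.0, 2.0):.0f}C")
```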

It is interesting to note that while “C” is used to designate units of C rate, the units are actually 1/h, or h⁻¹. The type of battery and its design have a large impact on the battery’s C rate. Batteries for power tools, for example, have a high C rate capability of 10C or greater, as they need to deliver high levels of power over short periods of time. More often, however, portable wireless mobile devices need to run for considerably longer, so they use batteries with relatively low C rates. A battery’s capacity is validated at a C rate considerably lower than it is capable of, because as the C rate increases, the delivered capacity drops due to losses within the battery itself.

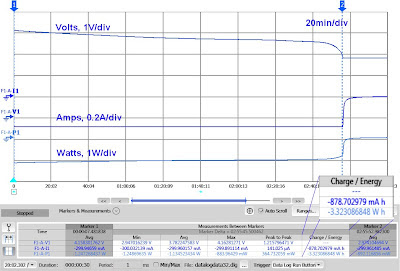

Validating a battery’s capacity and energy ratings requires logging the battery’s voltage and current over an extended period of time, most often with a regulated constant-current load. An example of this for a lithium-ion cell is shown in Figure 1. Capacity was found to be 12% lower than its rating.
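Once voltage and current have been logged, capacity and energy come from integrating current and power over time. The sketch below uses trapezoidal integration on synthetic data. The data is entirely hypothetical and is not the Figure 1 measurement: a 1 A constant-current discharge lasting 2 hours, sampled once a minute, with voltage falling linearly from 4.2 V to 3.0 V. It is compared against an assumed 2.2 Ah rating. A real log would end when the cell reaches the manufacturer’s specified end-of-discharge voltage.

```python
from typing import List, Sequence, Tuple


def integrate_trapezoid(t_s: Sequence[float], y: Sequence[float]) -> float:
    """Trapezoidal integral of samples y over timestamps t_s (seconds)."""
    total = 0.0
    for i in range(1, len(t_s)):
        total += 0.5 * (y[i] + y[i - 1]) * (t_s[i] - t_s[i - 1])
    return total


def capacity_and_energy(t_s: Sequence[float], current_a: Sequence[float],
                        voltage_v: Sequence[float]) -> Tuple[float, float]:
    """Return (capacity in Ah, energy in Wh) from logged samples."""
    charge_c = integrate_trapezoid(t_s, current_a)            # ampere-seconds
    power_w = [i * v for i, v in zip(current_a, voltage_v)]   # instantaneous power
    energy_j = integrate_trapezoid(t_s, power_w)              # watt-seconds
    return charge_c / 3600.0, energy_j / 3600.0


def percent_vs_rating(measured: float, rated: float) -> float:
    """Percent difference of a measured value from its rating (negative = short)."""
    return 100.0 * (measured - rated) / rated


# Synthetic (hypothetical) log: 1 A for 7,200 s, sampled every 60 s
t: List[float] = [60.0 * k for k in range(121)]
i_a: List[float] = [1.0 for _ in t]
v_v: List[float] = [4.2 - 1.2 * tk / 7200.0 for tk in t]

cap_ah, energy = capacity_and_energy(t, i_a, v_v)
print(f"Capacity: {cap_ah:.3f} Ah, energy: {energy:.3f} Wh")
print(f"vs. assumed 2.2 Ah rating: {percent_vs_rating(cap_ah, 2.2):.1f}%")
```

Trapezoidal integration is used here because logged samples are discrete. With a constant-current load, the capacity reduces to current times elapsed time, but integrating the samples also handles a load that drifts.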